The 2026 AWS Agentic Stack: Enterprise Architecture & ROI Mind Map

Implementing a production-grade AWS Agentic Stack in 2026 requires a fundamental shift from stateless chat prompts to stateful, autonomous workflows that deliver measurable business value and implementation ROI. This architecture blueprint illustrates how Amazon Bedrock AgentCore integrates with persistent memory layers to optimize Agentic TCO (Total Cost of Ownership) while enforcing the rigorous Enterprise AI Governance Framework that global compliance requires.

Figure 1: The 2026 AWS Agentic Stack Blueprint – Visualizing the integration of Bedrock AgentCore and S3 Vectors for the Autonomous Workforce.

Quick Architectural Roadmap

- Summary: The 2026 Agentic Architecture Shift

- 1. The Architectural Core: Amazon Bedrock AgentCore Runtime

- 2. Hybrid Memory Strategy: AWS S3 Vectors & SQL Server 2025 Hub

- 3. Agentic FinOps: Optimizing AI TCO and Token Efficiency

- 4. Enterprise AI Governance: Automated Reasoning Guardrails

- 5. Enterprise AI Governance: Automating EU AI Act Annex III Evidence Collection

- 6. The 5-Step Roadmap to Agentic Production

- Conclusion: Future-Proofing Your AI Stack

- Frequently Asked Questions (Implementation)


Beyond the Chatbot: Engineering the AWS Autonomous Workforce for Scalability

The enterprise tech landscape of 2024 was defined by simple dialogue experimentation. By 2025, the focus shifted to “The Pipeline” and standard RAG implementations. In 2026, however, the industry has moved into a higher-stakes era: The Rise of the Autonomous Workforce. Today, the competitive moat is no longer built on basic AI interaction; it is built on how effectively an organization can delegate mission-critical, high-value workflows to Autonomous AI Agents via AWS Private Offers and Marketplace Integration.

This is the transition from Generative AI (content creation) to Agentic AI (outcome execution). In a production-grade environment, an agent doesn’t just draft a report; it autonomously performs Inference Cost Optimization, identifies logistics bottlenecks, and triggers Automated Procurement Workflows through an ERP system—all while adhering to strict Service Level Agreements (SLA) and Data Residency policies.

For Architects, CTOs, and Procurement Heads, this represents a shift toward Deterministic AI outcomes. The questions dominating the boardroom today focus on financial and operational risks: How do we manage the compute unit economics of thousands of autonomous sessions? How do we ensure SOC2 Compliant AI security for actions taken without human intervention? And most importantly, how do we achieve Scalable Agentic Orchestration without skyrocketing costs?

At MyTechMantra, we have analyzed the 2026 AWS stack to solve these challenges. The foundation of this new era isn’t just a smarter model—it is a specialized, enterprise-grade execution layer designed for the “doers” of the digital world: Amazon Bedrock AgentCore.

Strategic Technical Silos: The 2026 Agentic Roadmap

Navigate the five critical layers of the AWS Agentic Stack to solve for performance, scale, and profitability.

The Architectural Core: Amazon Bedrock AgentCore Runtime

The “fragile agent” era—where developers manually wrapped Large Language Models (LLMs) in custom Python scripts and fragile AWS Lambda triggers—is officially over. To scale an autonomous digital workforce, enterprises now require a dedicated orchestration environment. In 2026, that environment is Amazon Bedrock AgentCore.

1. Framework Agnosticism: The End of Vendor Lock-in

One of the primary drivers of Amazon Bedrock AgentCore adoption in 2026 is its Bring Your Own Framework (BYOF) philosophy. Whether your team is utilizing LangGraph, CrewAI, or the latest Model Context Protocol (MCP) servers, AgentCore Runtime serves as the standardized, cloud-native host. This framework agnosticism allows enterprises to remain agile, switching between orchestration libraries as the market evolves while maintaining a consistent, secure deployment target on AWS.

2. The 8-Hour Asynchronous Execution Window

Early AI automation was crippled by the 15-minute timeout limits of traditional serverless functions. In 2026, AgentCore Runtime addresses this “Long-Running Task” problem by supporting asynchronous execution windows of up to 8 hours, allowing agents to perform “Deep Work.” Whether it’s an agent reconciling thousands of cross-border invoices or a DevOps agent performing a complex system migration over several hours, AgentCore keeps the session active, stateful, and reliable until the goal is achieved.
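Even with a long execution window, a multi-hour job benefits from being resumable. The following is a minimal sketch of a time-budgeted batch loop with a checkpoint. The names `Checkpoint` and `run_batch` are hypothetical and are not part of any AgentCore API; a real agent would persist the checkpoint in external storage.

```python
import time
from dataclasses import dataclass, field

# The 8-hour window described above, expressed in seconds.
MAX_SESSION_SECONDS = 8 * 60 * 60


@dataclass
class Checkpoint:
    """Progress marker that a real agent would persist outside the session."""
    next_index: int = 0
    results: list = field(default_factory=list)


def run_batch(items, handler, checkpoint, budget_seconds=MAX_SESSION_SECONDS,
              clock=time.monotonic):
    """Process items until finished or the time budget is spent.

    Returns (checkpoint, finished) so a later session can resume the work.
    """
    started = clock()
    while checkpoint.next_index < len(items):
        if clock() - started >= budget_seconds:
            return checkpoint, False  # out of time: hand back resumable state
        checkpoint.results.append(handler(items[checkpoint.next_index]))
        checkpoint.next_index += 1
    return checkpoint, True
```

Passing the clock as a parameter keeps the loop testable without waiting for real time to elapse.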

3. MicroVM Session Isolation: The CISO’s Safety Net

In a world of Agentic AI, security is paramount. The 2026 standard for Enterprise AI Security is MicroVM-level isolation. Every agent session managed by AgentCore Runtime is encapsulated in its own dedicated MicroVM. This Zero-Trust architecture ensures that one agent cannot access the data or memory of another, effectively eliminating the risk of cross-tenant data leakage—a critical requirement for regulated industries like BFSI and Healthcare.

4. Intelligent Tool Discovery via AgentCore Gateway

An agent is only as powerful as the tools it can access. The AgentCore Gateway acts as the central control plane for all external integrations. Instead of hard-coding API keys, the Gateway uses semantic discovery to help agents “find” and authorize the right tool for a task—be it an SAP database, a Salesforce instance, or a specialized internal API. This governed approach ensures that every “Action” taken by an agent is logged, audited, and strictly permissioned.
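To make the idea of governed tool discovery concrete, here is a toy sketch. It is not the Gateway's API: the `Tool` registry, the role model, and the use of word overlap (Jaccard similarity) as a stand-in for real semantic matching are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    description: str
    allowed_roles: frozenset


def _tokens(text):
    return set(text.lower().split())


def discover_tool(task, role, registry):
    """Return the permitted tool whose description best matches the task, or None."""
    task_tokens = _tokens(task)
    best, best_score = None, 0.0
    for tool in registry:
        if role not in tool.allowed_roles:
            continue  # permission check comes before any matching
        union = task_tokens | _tokens(tool.description)
        if not union:
            continue
        score = len(task_tokens & _tokens(tool.description)) / len(union)
        if score > best_score:
            best, best_score = tool, score
    return best
```

A production system would replace the word-overlap score with embedding similarity and would log every lookup, but the ordering shown here (authorize first, then rank) is the part that matters for governance.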

Hybrid Memory Strategy: AWS S3 Vectors & SQL Server 2025

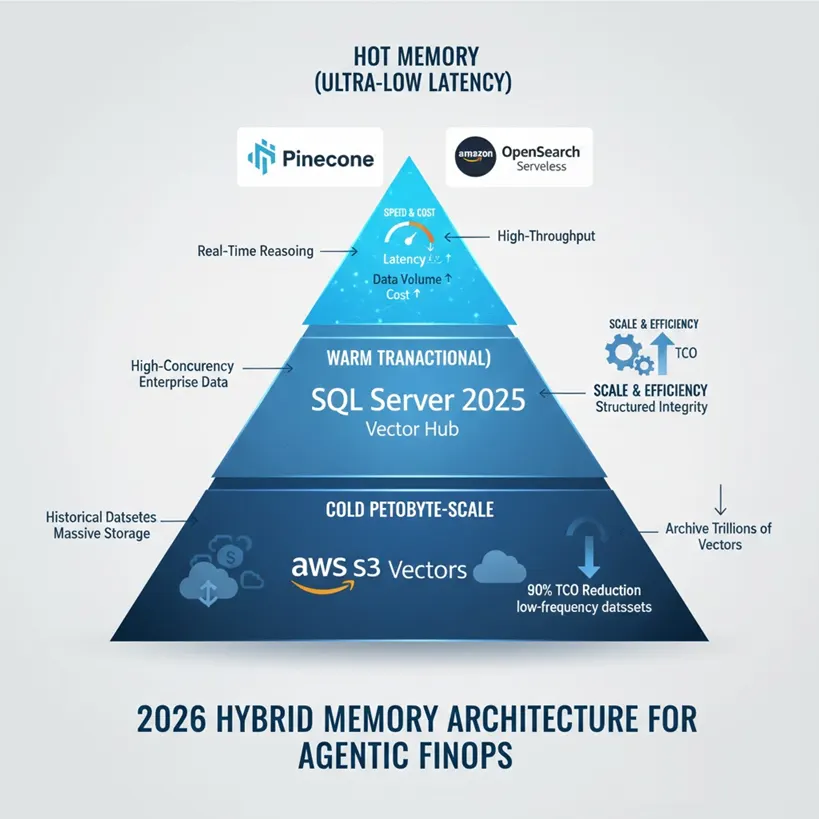

The 2026 Hybrid Context Roadmap

While the AWS native stack provides the baseline for Agentic FinOps, most production-grade architectures integrate specialized tiers for mission-critical tasks.

- Hot Memory (Ultra-Low Latency): For real-time, high-throughput agentic reasoning, architects frequently deploy premium managed vector databases like Pinecone or Amazon OpenSearch Serverless.

- Warm Memory (Transactional): To handle high-concurrency enterprise data with structured integrity, SQL Server 2025 serves as the primary system of record.

- Cold Memory (Petabyte-Scale): To archive trillions of vectors across historical datasets at a 90% TCO reduction, Native S3 Vectors provide the massive-scale foundation.

Figure 2: The 2026 AWS Hybrid Memory Hierarchy: Balancing millisecond-precision for Hot reasoning against 90% TCO reduction at the Cold storage tier.

By offloading historical session logs and low-frequency context to S3-native storage, the AWS Agentic Stack avoids the massive compute overhead of keeping expensive vector database instances running 24/7. This establishes a high-performance foundation for the Agentic FinOps strategy detailed below.
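The Hot, Warm, and Cold tiers can be expressed as a simple routing rule. This is a sketch under stated assumptions: the `choose_tier` function and its inputs are hypothetical, and the 800 ms threshold is simply the upper end of the 100ms–800ms S3 Vectors latency range quoted later in this article.

```python
from enum import Enum

# Assumption: worst-case cold-tier latency, taken from the 100ms-800ms range
# the article quotes for S3 Vectors. Tune it for your own workload.
COLD_TIER_WORST_CASE_MS = 800


class Tier(Enum):
    HOT = "managed vector database"
    WARM = "SQL Server 2025"
    COLD = "S3 Vectors"


def choose_tier(latency_budget_ms: int, needs_transactions: bool) -> Tier:
    """Pick a memory tier for one retrieval request (illustrative rules only)."""
    if needs_transactions:
        return Tier.WARM  # structured, transactional data stays in the system of record
    if latency_budget_ms < COLD_TIER_WORST_CASE_MS:
        return Tier.HOT   # the budget cannot absorb a worst-case cold query
    return Tier.COLD
```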

Hybrid Memory Strategy: AWS AgentCore + SQL Server 2025

While Amazon Bedrock AgentCore manages the serverless runtime and session isolation, SQL Server 2025 serves as the critical ‘System of Record’ for agentic memory. By utilizing the SQL Server 2025 Vector Hub, architects can ensure low-latency retrieval for Production-Grade RAG 2.0 workflows, keeping high-compliance enterprise data on-premises or in managed instances while leveraging cloud-scale reasoning.

Intelligence Selection: The Nova 2 Pro vs. Claude 4 Reasoning Duel

If AgentCore provides the skeletal structure, the Foundation Model (FM) acts as the agent’s frontal cortex—responsible for high-level planning, tool-use strategy, and logical deduction. In 2026, the industry has converged on a critical comparison: Amazon Nova 2 Pro vs. Anthropic Claude 4.

Nova 2 Pro: The Logic Engine for Agentic Automation

The 2026 release of Amazon Nova 2 Pro has fundamentally altered the unit economics of enterprise AI. While previous generations prioritized broad creative writing, Nova 2 Pro is surgically optimized for agentic reasoning and software engineering tasks.

Architects are favoring Nova 2 Pro for production workflows due to its industry-leading τ²-Bench (Tool-Use) scores. This model is designed for high-stakes decision tasks, excelling at navigating complex, multi-step environments like ERP systems or multi-repo GitHub environments without losing context. A standout 2026 feature is its Adjustable Thinking Budget, which allows developers to toggle between “Standard” and “Extended Thinking” modes. This enables a cost-governed architecture where agents use high-intensity reasoning only when a task demands it—slashing unnecessary compute spend by up to 60%.
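How much a thinking budget saves depends on how often extended reasoning is actually needed. The sketch below is back-of-the-envelope arithmetic, not vendor pricing: it assumes (hypothetically) that an extended-thinking call costs a fixed multiple of a standard call, and compares a mixed workload against running everything in extended mode.

```python
def blended_savings(extended_fraction: float, extended_cost_multiplier: float) -> float:
    """Fraction of spend saved versus running every task in extended mode.

    extended_fraction: share of tasks that truly need extended thinking (0..1).
    extended_cost_multiplier: assumed cost of an extended call relative to a
        standard call (must be >= 1).
    """
    if not 0.0 <= extended_fraction <= 1.0:
        raise ValueError("extended_fraction must be between 0 and 1")
    if extended_cost_multiplier < 1.0:
        raise ValueError("extended_cost_multiplier must be at least 1")
    blended = extended_fraction * extended_cost_multiplier + (1.0 - extended_fraction)
    return 1.0 - blended / extended_cost_multiplier
```

With illustrative inputs of 20% extended tasks and a 4x cost multiplier, `blended_savings(0.2, 4.0)` works out to roughly 0.6, which is one way a figure in the range quoted above could arise. Your own ratio and multiplier will differ.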

Claude 4: The Nuanced Strategist

Conversely, Claude 4 (specifically the Opus 4 and Sonnet 4 variants) remains the premier choice for tasks requiring nuanced human alignment and creative problem-solving. While Nova 2 Pro is often viewed as the “mechanical genius” for tool execution, Claude 4’s strength lies in its Extended Thinking capabilities for ambiguous scenarios. Its ability to maintain a consistent “persona” while processing massive contexts makes it indispensable for legal analysis, high-level strategic planning, and sophisticated app creation.

The 2026 Verdict: Model Distillation as a Competitive Moat

Advanced 2026 architectures leverage Model Distillation as a strategic framework. By employing a frontier model as a “Teacher,” enterprises train smaller, faster “Student” models for specialized repetitive tasks. This hybrid approach delivers frontier-level intelligence at a 75% lower inference cost, which is the key to moving GenAI from a “lab experiment” to “profitable production.”

RAG 2.0: Multimodal Memory and the Native S3 Vector Revolution

An agent with high intelligence but no memory is merely a calculator. In 2026, we have moved beyond basic text-retrieval into RAG 2.0, where memory is persistent, multimodal, and cost-optimized.

The Breakthrough: Amazon Nova Multimodal Embeddings

Data silos are the enemy of autonomy. Amazon Nova Multimodal Embeddings has unified the memory layer by mapping text, high-definition video, audio, and complex documents into a single semantic space.

This enables Cross-Modal Retrieval: an agent can now “remember” a technical diagram from a 500-page PDF or “retrieve” a specific action item from a recorded Zoom meeting with the same latency as a text search. The ability to index and interpret this information represents a massive opportunity to activate petabytes of unstructured dark data, a resource that was previously invisible to legacy automation but is now essential for autonomous AI agents.

Native S3 Vectors: High-Scale Memory at 90% Lower Cost

The most disruptive architectural shift of 2026 is the introduction of Native Amazon S3 Vectors. Historically, architects were forced to maintain expensive, separate vector databases for agent memory.

S3 Vectors replaces that complexity by embedding vector search directly into S3. This “Storage-Compute Separation” allows for:

- Massive Scale: Hosting up to 20 trillion vectors across 10,000 indexes per bucket.

- Cost Efficiency: Storing 1 billion vectors for a fraction of the cost of managed databases, representing a 90% reduction in TCO.

- Latency: Achieving 100ms–800ms query performance natively within the storage layer, ideal for asynchronous agentic memory.
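The scale and cost figures in the list above lend themselves to quick capacity planning. The sketch below derives the implied per-index capacity from the two numbers quoted (20 trillion vectors, 10,000 indexes) and applies the article's 90% figure to a managed-database cost that you supply. The function names are hypothetical and no real pricing is assumed.

```python
TOTAL_VECTORS_PER_BUCKET = 20 * 10**12  # "20 trillion vectors" from the list above
INDEXES_PER_BUCKET = 10_000             # "10,000 indexes per bucket"


def vectors_per_index() -> int:
    """Per-index capacity implied by the two figures above."""
    return TOTAL_VECTORS_PER_BUCKET // INDEXES_PER_BUCKET


def indexes_needed(vector_count: int) -> int:
    """Minimum number of indexes for a dataset, using ceiling division."""
    per_index = vectors_per_index()
    return -(-vector_count // per_index)


def cold_tier_cost(managed_db_cost: float, reduction: float = 0.90) -> float:
    """Estimated cold-tier cost, given the article's stated TCO reduction."""
    return managed_db_cost * (1.0 - reduction)
```

For example, a 5-billion-vector archive would need three indexes under these figures, since the implied capacity is 2 billion vectors per index.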

Agentic FinOps: Optimizing AI TCO and Token Efficiency

The greatest barrier to the Autonomous Workforce in 2026 isn’t intelligence; it’s the unit economics of at-scale inference. Agentic FinOps is the architectural discipline of aligning agent performance with business value, ensuring that “Agentic TCO” (Total Cost of Ownership) remains below the cost of manual human intervention.

The Three Pillars of 2026 AI Cost Governance

- Dynamic Model Routing: As noted in our model comparison, the “Extended Thinking” budget is the primary lever for cost control. By routing routine tool-use tasks to Nova 2 Lite and reserving the high-cost Nova 2 Pro or Claude 4 for high-stakes reasoning, architects can reduce monthly inference spend by nearly 65%.

- Token-Efficient Memory (Tiered RAG): Utilizing Native S3 Vectors for “Cold Memory” and SQL Server 2025 for “Warm Memory” prevents “Token Bloat.” Instead of passing massive historical conversation logs into every prompt, agents perform high-precision retrieval, feeding only the most relevant 2% of data into the LLM context window.

- Serverless Agentic Runtimes: By leveraging Amazon Bedrock AgentCore, enterprises eliminate “Idle Compute Tax.” In 2026, the transition from always-on containers to serverless agentic loops means you pay only for the seconds the agent is actively “thinking” or “acting,” not for the time it spends waiting for an API response.
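The first two pillars can be sketched in a few lines. This is an illustration only: `route_model` returns placeholder tier labels rather than real Bedrock model IDs, and `select_context` assumes each memory chunk already carries a relevance score from some retrieval step.

```python
def route_model(is_routine_tool_call: bool, high_stakes: bool) -> str:
    """Return a model tier label; the labels are placeholders, not model IDs."""
    if high_stakes or not is_routine_tool_call:
        return "frontier"  # reserve the expensive model for demanding work
    return "lite"


def select_context(scored_chunks, keep_percent: int = 2):
    """Keep only the highest-scoring chunks (top `keep_percent`, at least one).

    scored_chunks: list of (text, relevance_score) pairs.
    """
    if not scored_chunks:
        return []
    ranked = sorted(scored_chunks, key=lambda pair: pair[1], reverse=True)
    keep = max(1, -(-len(ranked) * keep_percent // 100))  # ceiling division
    return [text for text, _score in ranked[:keep]]
```

The default of 2 percent mirrors the “most relevant 2% of data” figure above; in practice the cut-off is usually tuned against answer quality rather than fixed.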

The Safety Frontier: From Probabilistic Guesses to Deterministic Logic

In 2026, the primary barrier to autonomous agent deployment is no longer a lack of intelligence; it is a lack of trust. Traditional LLMs are inherently probabilistic, meaning they operate on likelihoods rather than hard rules. For a bank or a healthcare provider, a “95% probability of safety” is an unacceptable risk.

To bridge this gap, the AWS Agentic Stack has introduced a “Logic Layer” that sits above the model. This layer ensures that every action taken by an agent is mathematically verified against enterprise policies before it is executed. This is the transition from “Trust me, I’m an AI” to “Here is the proof of compliance.”

1. Automated Reasoning Guardrails: The 99% Accuracy Milestone

The 2026 gold standard for preventing hallucinations is Automated Reasoning (AR) checks within Amazon Bedrock Guardrails. Unlike standard filters that look for “bad words,” AR checks use formal logic, a branch of mathematics, to verify the consistency of an agent’s response against a provided source of truth.

When you implement Automated Reasoning policies for Bedrock, the system translates your natural language business rules into formal mathematical statements. If an agent generates a response that contradicts these rules, the AR engine detects the inconsistency with up to 99% verification accuracy. This provides a “provable assurance” essential for regulated industries, establishing “mathematically verifiable AI safety” as a foundational requirement for enterprise-grade deployments within the legal and compliance sectors.
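The difference between a logical check and a keyword filter is easier to see in miniature. The toy below is not the Bedrock implementation or its API: it hand-encodes a single hypothetical business rule as a Boolean formula and exhaustively tests every assignment of the facts a response leaves unstated. The result labels are illustrative.

```python
from itertools import product

# Hypothetical facts a response might assert about a refund.
VARIABLES = ("refund_over_500", "manager_approved", "refund_issued")


def refund_policy(facts):
    """Rule: a refund over 500 may be issued only with manager approval."""
    return (not (facts["refund_issued"] and facts["refund_over_500"])) or facts["manager_approved"]


def check_claims(claims, rules=(refund_policy,)):
    """Classify claims as 'valid', 'invalid', or 'satisfiable' against the rules."""
    free = [name for name in VARIABLES if name not in claims]
    outcomes = []
    for values in product([False, True], repeat=len(free)):
        facts = {**claims, **dict(zip(free, values))}
        outcomes.append(all(rule(facts) for rule in rules))
    if all(outcomes):
        return "valid"        # the rules hold however the unstated facts turn out
    if not any(outcomes):
        return "invalid"      # the claims contradict the rules outright
    return "satisfiable"      # consistent only under some unstated conditions
```

Real automated reasoning engines use solvers rather than brute-force enumeration, but the principle is the same: the verdict follows from the rules, not from a probability score.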

2. Cedar-based Policies: The “Zero-Trust” for Agents

If Automated Reasoning is the “judge,” Cedar-based policies are the “security guards” of the AgentCore Gateway. Cedar is a high-performance, open-source policy language that allows architects to write fine-grained, readable permissions for agent actions.

In a 2026 production environment, we use Cedar to enforce Least-Privilege Agentic Access. For example, a “Refund Agent” may have a Cedar policy that says:

permit (principal, action == Action::"IssueRefund", resource) unless { context.refund_amount > 500 };

By using Cedar policies for AgentCore, security teams can audit and manage agent permissions independently of the agent’s code. This critical separation of concerns facilitates a robust “Agentic Zero-Trust Architecture,” establishing a new standard for decentralized security and governance within complex autonomous workflows.
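For readers who want to reason about the refund rule without a Cedar engine at hand, its effect can be mirrored in plain Python. This is a sketch of the decision logic only, assuming a default-deny model and a hypothetical `Request` shape; it does not call Cedar or AgentCore.

```python
from dataclasses import dataclass

REFUND_LIMIT = 500  # threshold from the refund example above


@dataclass(frozen=True)
class Request:
    action: str
    context: dict


def is_authorized(request: Request) -> bool:
    """Allow IssueRefund unless the amount exceeds the limit; deny everything else."""
    if request.action != "IssueRefund":
        return False  # no policy permits other actions, so they are denied
    amount = request.context.get("refund_amount")
    if not isinstance(amount, (int, float)):
        return False  # a missing or malformed amount is treated as a denial
    return amount <= REFUND_LIMIT
```

Because the check lives outside the agent's own code, the limit can be changed or audited without redeploying the agent, which is the separation of concerns described above.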

The 5-Step Roadmap to Production for Agentic AI

Transitioning from a Proof of Concept (POC) to a live, autonomous workforce requires a structured approach. Here is the 2026 blueprint for scaling:

Step 1: Define the Decision Boundary

Identify which decisions are autonomous and which require a Human-in-the-Loop (HITL). Map out your “Red Lines”: actions the agent is strictly forbidden from taking (e.g., changing contract terms or accessing PII).

Step 2: Construct the Context Layer

Deploy Multimodal RAG 2.0 by utilizing Native S3 Vectors for high-scale, cost-effective persistence. While the native stack offers unparalleled TCO, this architecture is designed for extensibility. Senior architects often leverage the Agentic Stack to orchestrate context from enterprise-grade third-party data platforms, ensuring that agents can tap into existing high-performance clusters while benefiting from AWS-native serverless reasoning. This hybrid approach allows for a “best-of-breed” context strategy, combining local S3 efficiency with the specialized capabilities of the broader data ecosystem.

Step 3: Implement Deterministic Safety

Layer your Automated Reasoning Guardrails over your chosen model (Nova 2 Pro or Claude 4). Write your initial Cedar policies to govern tool use through the AgentCore Gateway.

Step 4: Conduct “Agentic Red Teaming”

Simulate “adversarial agent interactions.” Test how your agent handles prompt injections or “goal hijacking” attempts. Use the Bedrock Evaluation suite to stress-test your guardrails against edge cases.

Step 5: Deploy via AgentCore Runtime

Move your agent to the AgentCore Runtime for serverless, isolated execution. Enable Agentic Observability to track reasoning traces and tool-call latency.
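The decision boundary from Step 1 is worth writing down as data before any agent code exists. A minimal sketch follows, with hypothetical action names; the two red lines mirror the examples given in Step 1, and anything not explicitly listed falls back to human review.

```python
from enum import Enum


class Decision(Enum):
    AUTONOMOUS = "autonomous"
    HUMAN_REVIEW = "human-in-the-loop"
    FORBIDDEN = "forbidden"


# Hypothetical action names, chosen for illustration.
RED_LINES = frozenset({"change_contract_terms", "access_pii"})
AUTONOMOUS_ACTIONS = frozenset({"summarize_ticket", "lookup_order_status"})


def classify_action(action: str) -> Decision:
    """Map an action to its decision class; unknown actions need a human."""
    if action in RED_LINES:
        return Decision.FORBIDDEN
    if action in AUTONOMOUS_ACTIONS:
        return Decision.AUTONOMOUS
    return Decision.HUMAN_REVIEW
```

Defaulting unknown actions to human review is a deliberately conservative choice: new tools added later do not become autonomous by accident.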

Automating EU AI Act Annex III Evidence Collection: Continuous GRC Integration for High-Risk Systems

The Compliance Moat

While the Bedrock AgentCore stack provides the raw orchestration for agentic workflows, production-grade deployment in 2026 requires Deterministic Enforcement to meet global regulatory standards. Relying on probabilistic LLM ‘internal’ guardrails is insufficient for high-risk applications. To bridge the gap between autonomous agency and legal safety, architects must implement a Policy-as-Code layer using Cedar. Leading GRC platforms like OneTrust, Vanta, and Drata are increasingly being integrated at this layer to automate the ‘last mile’ of evidence collection.

For a technical deep-dive on automating these requirements and ensuring your AWS Agentic Stack is audit-ready for the 2026 enforcement deadline, refer to our comprehensive blueprint on EU AI Act Compliance Automation: Cedar Guardrails & Out-of-Loop Enforcement.

Conclusion: The Future of the Autonomous Enterprise

The transition from generative experimentation to Agentic AI production represents the most significant architectural shift of the decade. As we have demonstrated throughout this 2026 blueprint, the success of an autonomous workforce relies on four critical pillars: the Amazon Bedrock AgentCore Runtime for serverless execution, Amazon Nova for high-stakes multi-modal reasoning, Native S3 Vectors for Agentic FinOps and cost-efficient memory scaling, and Automated Reasoning Guardrails for Enterprise AI Governance.

By integrating these components, organizations can finally move beyond “Vibe Coding” and simple chatbots toward enterprise-scale agentic workflows that are secure, scalable, and fiscally responsible. For the modern architect, the goal is no longer just to build an AI that can think, but to govern an agent that can act with mathematical certainty within a zero-trust framework.

To operationalize this vision, architects must move from high-level strategy to specialized technical execution across the five critical layers of the 2026 AI Infrastructure Stack. First, validate your intelligence layer by analyzing our 2026 Benchmarks: Nova 2 Pro vs. Claude 4 vs. Llama 4, which provides the deterministic latency and accuracy data required for model selection. Once the model is selected, the orchestration challenge is solved by Scaling Multi-Agent Systems with Amazon Bedrock AgentCore, a guide to managing high-concurrency autonomous swarms. To ensure these agents possess long-term “Stateful Intelligence,” our deep-dive on Enterprise RAG 2.0: Multimodal & Persistent Memory explains how to integrate S3-native vectors into agentic workflows. However, scaling intelligence is a liability without a robust EU AI Act Compliance & Cedar Guardrails framework to automate cross-border regulatory safety. Finally, to ensure the entire ecosystem remains profitable, our guide on GenAI FinOps: Model Distillation & Prompt Caching provides the mathematical roadmap to reducing inference TCO by 75%—completing the transformation from experimental AI to a fiscally governed, production-ready Agentic AI Fleet.

AWS Agentic Stack 2026: Implementation Roadmap & Architect FAQs

1. How does Amazon Bedrock AgentCore differ from traditional AWS Lambda agents?

In the 2024–2025 era, agents were often built using custom Python scripts within AWS Lambda, which faced 15-minute timeout limitations and lacked native state management. Amazon Bedrock AgentCore is a 2026 serverless runtime designed specifically for autonomous workers. It provides an 8-hour asynchronous execution window, native session isolation via MicroVMs, and a dedicated orchestration layer that handles complex tool-use without the infrastructure overhead of managing individual containers or functions.

2. Should I use Amazon Nova 2 Pro or Anthropic Claude 4 for agentic workflows?

The choice depends on your “Decision Density.” Amazon Nova 2 Pro is currently the leader for logic-heavy automation, boasting superior benchmarks in tool-calling (τ²-Bench) and a unique “Adjustable Thinking Budget” that allows for significant cost optimization. Anthropic Claude 4 remains the preferred choice for high-context, creative strategy and tasks requiring nuanced human-like reasoning. Most enterprise architectures in 2026 utilize both, routing mechanical tasks to Nova and strategic analysis to Claude.

3. What are the cost benefits of using Native S3 Vectors over a dedicated vector database?

In the 2026 stack, these are complementary rather than competing technologies. Managed platforms like Pinecone and OpenSearch Serverless are optimized for high-throughput, millisecond-precision “Hot Memory” tasks. Native S3 Vectors provide a cost-optimized “Cold Memory” tier, allowing enterprises to index petabytes of historical data for a fraction of the cost, creating a unified, multi-tiered memory strategy.

4. How do Automated Reasoning Guardrails improve AI safety in regulated industries?

Standard guardrails rely on probabilistic “filters” which can be bypassed. Automated Reasoning (AR) Guardrails in Bedrock use formal mathematical logic to check that an agent’s response aligns with enterprise policies. This deterministic approach provides up to 99% verification accuracy, making it a strong fit for regulated sectors like Finance and Healthcare, where compliance must be demonstrable rather than assumed.

5. Can I use open-source frameworks like LangGraph or CrewAI with AgentCore?

Yes. Amazon Bedrock AgentCore is framework-agnostic. While AWS provides its own orchestration tools, AgentCore is designed to host agents built on any popular 2026 framework. This allows teams to leverage the rapid innovation of the open-source community while benefiting from AWS’s enterprise-grade security, scaling, and Cedar-based policy enforcement.

6. What is the role of Model Distillation in 2026 Bedrock architectures?

Model Distillation is the process of using a high-intelligence “Teacher” model (like Nova 2 Pro) to train a smaller, specialized “Student” model (like Nova 2 Lite). In a production environment, this allows you to achieve near-frontier performance for specific repetitive tasks at 75% lower inference costs and up to 500% faster speeds. This is the cornerstone of 2026 cost-optimization strategies for high-volume agentic fleets.

